ODI Hello World Tutorial: ODI Interface Table-to-Table Data Loading

In my previous posts, I described repositories and agents. Now let's get into the real implementation of data integration in ODI. In this post, I will show the step-by-step process of implementing table-to-table integration in ODI. Since this is the first implementation (which is why I am calling it a hello world tutorial), I will also show the topology configuration, creating models and starting a project in ODI.

Topology Configuration

Creating a table-to-table integration involves only creating an interface in ODI, but there are some prerequisites before we get there.

Step 1. Create a physical server and logical servers. If they already exist, go to Step 2. A physical server is the configuration that points to the physical connection and security details for a database (Oracle, SQL Server, Sybase, etc.), file, JMS, XML, complex file, etc. We can create a physical connection for each environment (Dev, Test, Production). The logical server is what the interface uses when it runs: depending on the environment in which the interface is run, the logical server points to the respective physical server.

Step 2. Check whether a model already exists for the database tables we are going to use. A model is the result of reverse engineering database tables, views, AQs, etc. so that they can be used in ODI objects. Models also apply to files, XML, complex files and JMS; even for these sources, ODI creates the model in a relational way (as table-like datastores).
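To make the idea of a relational model for non-database sources concrete, here is a minimal Python sketch that presents a delimited file as a table-like datastore (a name, an ordered column list and rows). It assumes the file's first line is a header; the `Datastore` class and `datastore_from_delimited` function are hypothetical and only mimic the shape of the result, not how ODI's file reverse engineering is implemented.

```python
import csv
import io
from dataclasses import dataclass, field


@dataclass
class Datastore:
    """Hypothetical stand-in for an ODI datastore: a name, columns and rows."""
    name: str
    columns: list = field(default_factory=list)
    rows: list = field(default_factory=list)


def datastore_from_delimited(name, text, delimiter=","):
    """Hypothetical helper: present a delimited file (header line first) as a table."""
    reader = csv.reader(io.StringIO(text), delimiter=delimiter)
    header = next(reader)
    # Each header field becomes a column, just as a table column would.
    columns = [col.strip().upper() for col in header]
    rows = [tuple(record) for record in reader if record]  # skip blank lines
    return Datastore(name=name, columns=columns, rows=rows)


sample = "employee_id;first_name;last_name\n100;Steven;King\n101;Neena;Kochhar\n"
ds = datastore_from_delimited("EMPLOYEES_FILE", sample, delimiter=";")
print(ds.columns)  # ['EMPLOYEE_ID', 'FIRST_NAME', 'LAST_NAME']
print(ds.rows[0])  # ('100', 'Steven', 'King')
```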

Once both steps are complete, we can create the interface. In this example, I have three schemas in an Oracle XE database:

1. HR - the Oracle-seeded sample schema, which has all the HR-related tables for demo purposes.

2. ODI_STAGE - a new schema I created. I will use it to hold the temporary objects created by ODI.

3. DATA_TARGET - a new schema I created. I will use it for the target tables.

I have an EMPLOYEES table in both HR and DATA_TARGET. HR.EMPLOYEES has some data, but DATA_TARGET.EMPLOYEES is empty. Using the interface I create in ODI, I will load the data from HR.EMPLOYEES into DATA_TARGET.EMPLOYEES. Also create a primary key constraint on EMPLOYEE_ID in the target table.

```sql
-- Target table: same columns as HR.EMPLOYEES, with a primary key on EMPLOYEE_ID.
CREATE TABLE "DATA_TARGET"."EMPLOYEES"
( "EMPLOYEE_ID"    NUMBER(6,0),
  "FIRST_NAME"     VARCHAR2(20 BYTE),
  "LAST_NAME"      VARCHAR2(25 BYTE) NOT NULL ENABLE,
  "EMAIL"          VARCHAR2(25 BYTE) NOT NULL ENABLE,
  "PHONE_NUMBER"   VARCHAR2(20 BYTE),
  "HIRE_DATE"      DATE NOT NULL ENABLE,
  "JOB_ID"         VARCHAR2(10 BYTE) NOT NULL ENABLE,
  "SALARY"         NUMBER(8,2),
  "COMMISSION_PCT" NUMBER(2,2),
  "MANAGER_ID"     NUMBER(6,0),
  "DEPARTMENT_ID"  NUMBER(4,0),
  PRIMARY KEY ("EMPLOYEE_ID")
);
```

Physical Server and Logical Server definition

After connecting to the work repository, go to the Topology tab. In the Physical Architecture section, expand Technologies, right-click Oracle and select New Data Server.

Create a data server that connects as the ODI_STAGE schema. This schema is ODI's entry point to the database. Any schema can be used for the data server, but it must have access to all the other schemas and objects we work with, both for creating models and for selecting, inserting and updating data.

Here I am using the ODI_STAGE schema for the data server, and HR and DATA_TARGET as physical schemas.

In the Definition tab, provide the name and the connection details for the ODI_STAGE schema.

Right-click the data server and select New Physical Schema. Let's create physical schemas for HR and DATA_TARGET, starting with HR. In the physical schema window, select the main schema and the work schema (the schema where ODI creates work tables such as the loading, error and integration tables). We can also set the prefix convention for the error, integration and loading tables that ODI creates in the work schema, ODI_STAGE, and configure masks specific to the technology we use.

Work tables:

Error - By default, this table has the E$ prefix. It stores the records that failed a constraint check together with the corresponding error message.

Loading - This table is an exact copy of the source data before any joins are done. By default, it has the C$ prefix.

Integration - This table holds the data that is about to be integrated into the target; when flow control is enabled, it is checked against the constraints for data errors. By default, it has the I$ prefix.
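The naming convention is simply the configured prefix followed by the datastore name, with the table created in the work schema. The sketch below assumes the default prefixes (E$_, C$_ and I$_) and uses a hypothetical `work_table` helper; the exact names ODI generates may differ slightly depending on the knowledge module and ODI version.

```python
# Default work-table prefixes, assumed here; each physical schema can override them.
DEFAULT_PREFIXES = {
    "error": "E$_",        # rows rejected by a constraint check
    "loading": "C$_",      # source data collected into the staging area
    "integration": "I$_",  # flow data about to be written to the target
}


def work_table(kind, datastore, work_schema="ODI_STAGE", prefixes=None):
    """Hypothetical helper: qualified work-table name, e.g. ODI_STAGE.C$_EMPLOYEES."""
    prefixes = prefixes or DEFAULT_PREFIXES
    return f"{work_schema}.{prefixes[kind]}{datastore.upper()}"


for kind in ("loading", "integration", "error"):
    print(work_table(kind, "employees"))
# ODI_STAGE.C$_EMPLOYEES
# ODI_STAGE.I$_EMPLOYEES
# ODI_STAGE.E$_EMPLOYEES
```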

Let's create a physical schema for DATA_TARGET too, with DATA_TARGET as the schema and ODI_STAGE as the work schema.

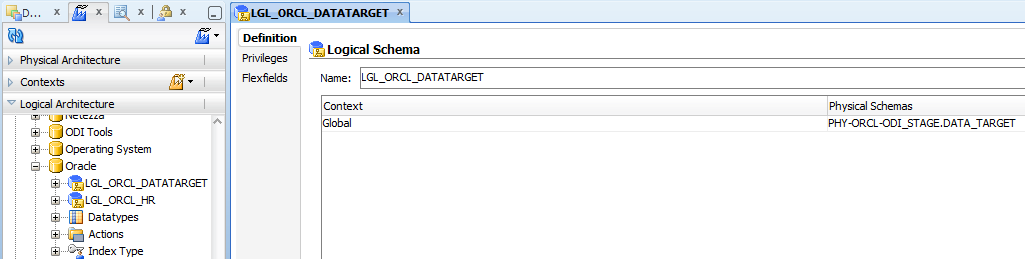

Next, let's create logical schemas for HR and DATA_TARGET, which map to the physical schemas based on the environment. In the Logical Architecture section, right-click Oracle and select New Logical Schema. Here we map each logical schema in the Global context. We could also create DEV, TEST and PRODUCTION contexts and point each one to the respective physical schema (a context is simply a reference to an environment). Since this is a demo, I am using the existing Global context.
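Conceptually, a context is a lookup key: at run time, ODI resolves each logical schema to the physical schema mapped for the chosen context. The Python sketch below models that lookup with a dictionary. Only the Global mappings for HR and DATA_TARGET come from this example; the PROD context, the `resolve` function and all physical schema names (`XE_ODI_STAGE.HR`, `PROD_DB.HR`, etc.) are hypothetical.

```python
# (context, logical schema) -> physical schema.
# Only the GLOBAL entries mirror this tutorial; the PROD entries and all
# physical schema names are hypothetical.
CONTEXT_MAP = {
    ("GLOBAL", "HR"): "XE_ODI_STAGE.HR",
    ("GLOBAL", "DATA_TARGET"): "XE_ODI_STAGE.DATA_TARGET",
    ("PROD", "HR"): "PROD_DB.HR",
    ("PROD", "DATA_TARGET"): "PROD_DB.DATA_TARGET",
}


def resolve(logical_schema, context="GLOBAL"):
    """Return the physical schema a logical schema points to in the given context."""
    try:
        return CONTEXT_MAP[(context, logical_schema)]
    except KeyError:
        raise LookupError(
            f"logical schema {logical_schema!r} is not mapped in context {context!r}"
        ) from None


print(resolve("HR"))                   # XE_ODI_STAGE.HR
print(resolve("DATA_TARGET", "PROD"))  # PROD_DB.DATA_TARGET
```

Because the interface refers only to logical schemas, the same interface can run against another environment once that context has its own mappings.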

Creating the Model

Let's create models for the HR and DATA_TARGET schemas now. Go to the Designer tab and, in the Models section, select New Model. Provide the model name, Oracle as the technology and the HR logical schema.

Select the Reverse Engineer tab and select the objects to reverse engineer. There are two types of reverse engineering: standard and customized. We can use standard reverse engineering here. If we select customized, we need to choose a reverse-engineering knowledge module (RKM). An RKM is useful when reverse engineering Oracle Apps, Oracle BI, etc., which have objects that need specific methods in addition to regular JDBC reverse engineering. Click the Reverse Engineer button, and ODI takes some time to reverse engineer the objects. In my case, it takes all the tables from the HR schema and puts them into the model, together with the constraints related to the tables.

This is how the model looks. To view the data in a table, right-click it and select View Data. In a model, objects are referred to as datastores.

In the same way, let's create a model for the DATA_TARGET schema. After reverse engineering, both models (HR and DATA_TARGET) look like this. The DATA_TARGET model has only one table, and it is empty.
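Standard reverse engineering works by reading the database's own metadata (through JDBC) to build each datastore's columns, data types and keys. As a rough analogy, the sketch below reads the same kind of metadata from SQLite with `PRAGMA table_info`. SQLite stands in for Oracle here, the `reverse_engineer` function is hypothetical, and EMPLOYEES is trimmed to three columns.

```python
import sqlite3


def reverse_engineer(conn, table):
    """Hypothetical helper: read column and primary-key metadata (table name is trusted)."""
    # PRAGMA table_info rows: (cid, name, type, notnull, dflt_value, pk)
    info = conn.execute(f"PRAGMA table_info({table})").fetchall()
    columns = [
        {"name": name, "type": col_type, "mandatory": bool(notnull)}
        for _cid, name, col_type, notnull, _default, _pk in info
    ]
    # pk is the 1-based position in the primary key, or 0 for non-key columns.
    key = [row[1] for row in sorted(info, key=lambda r: r[5]) if row[5] > 0]
    return {"name": table.upper(), "columns": columns, "primary_key": key}


conn = sqlite3.connect(":memory:")
conn.execute("""
    CREATE TABLE employees (
        employee_id INTEGER PRIMARY KEY,
        last_name   VARCHAR(25) NOT NULL,
        salary      NUMERIC(8, 2)
    )
""")
datastore = reverse_engineer(conn, "employees")
print([c["name"] for c in datastore["columns"]])  # ['employee_id', 'last_name', 'salary']
print(datastore["primary_key"])                   # ['employee_id']
```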

With this, we have completed configuring the physical and logical schemas and creating the models.

Creating Project and Interface

Let's create a project to hold the table-to-table interface.

In the Designer tab, in the Projects section, create a new project and provide a name. The project code is an important identifier, used to distinguish project-specific objects from global objects.

Expand the project we created, right-click Knowledge Modules and select Import Knowledge Modules. We need three knowledge modules here, for loading, integration and constraint checking: select CKM Oracle, LKM SQL to SQL and IKM SQL Incremental Update. The knowledge modules must match the technology and the kind of data loading we are doing. KMs automate the process with predefined code for loading, integration, constraint checking, reverse engineering and journalizing (change data capture).

1. LKM SQL to SQL - Automates loading data from the source into the staging area. It reads data from HR.EMPLOYEES and puts it into ODI_STAGE.C$_EMPLOYEES.

2. IKM SQL Incremental Update - Takes the data from the staging area and merges it into the target (inserting new rows and updating existing ones).

3. CKM Oracle - Checks the specific constraints we need to validate for the target data.

After importing, we can verify the imported KMs in the Knowledge Modules section.
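The division of labour between the LKM and the IKM can be simulated end to end. The sketch below assumes SQLite as a stand-in for Oracle: the main in-memory database plays ODI_STAGE, two attached in-memory databases play HR and DATA_TARGET, and EMPLOYEES is trimmed to three columns. `run_interface` is a hypothetical function that reproduces the effect of LKM SQL to SQL (collect into C$) and IKM SQL Incremental Update (update existing keys, insert new ones), not the statements those KMs actually generate.

```python
import sqlite3


def run_interface(conn, delete_temporary_objects=True):
    """Hypothetical simulation of LKM SQL to SQL + IKM SQL Incremental Update."""
    # LKM: collect source rows into the loading (C$) table in the staging area.
    conn.execute('DROP TABLE IF EXISTS "C$_EMPLOYEES"')
    conn.execute('CREATE TABLE "C$_EMPLOYEES" AS '
                 "SELECT employee_id, last_name, salary FROM hr.employees")
    # IKM, step 1: update target rows whose key already exists.
    conn.execute('''
        UPDATE data_target.employees
        SET last_name = (SELECT c.last_name FROM "C$_EMPLOYEES" c
                         WHERE c.employee_id = employees.employee_id),
            salary    = (SELECT c.salary FROM "C$_EMPLOYEES" c
                         WHERE c.employee_id = employees.employee_id)
        WHERE employee_id IN (SELECT employee_id FROM "C$_EMPLOYEES")
    ''')
    # IKM, step 2: insert rows whose key is not in the target yet.
    conn.execute('''
        INSERT INTO data_target.employees (employee_id, last_name, salary)
        SELECT c.employee_id, c.last_name, c.salary FROM "C$_EMPLOYEES" c
        WHERE NOT EXISTS (SELECT 1 FROM data_target.employees t
                          WHERE t.employee_id = c.employee_id)
    ''')
    conn.commit()  # plays the role of the IKM COMMIT option
    if delete_temporary_objects:
        conn.execute('DROP TABLE "C$_EMPLOYEES"')


conn = sqlite3.connect(":memory:")                         # plays ODI_STAGE
conn.execute("ATTACH DATABASE ':memory:' AS hr")           # plays HR
conn.execute("ATTACH DATABASE ':memory:' AS data_target")  # plays DATA_TARGET
for schema in ("hr", "data_target"):
    conn.execute(f"CREATE TABLE {schema}.employees (employee_id INTEGER PRIMARY KEY, "
                 "last_name TEXT NOT NULL, salary NUMERIC)")
conn.executemany("INSERT INTO hr.employees VALUES (?, ?, ?)",
                 [(100, "King", 24000), (101, "Kochhar", 17000)])
run_interface(conn)  # first run: both rows are inserted

# Change one source row and add another, then run again.
conn.execute("UPDATE hr.employees SET salary = 25000 WHERE employee_id = 100")
conn.execute("INSERT INTO hr.employees VALUES (102, 'De Haan', 17000)")
run_interface(conn)  # second run: 100 is updated, 102 is inserted
print(conn.execute("SELECT * FROM data_target.employees ORDER BY employee_id").fetchall())
# [(100, 'King', 25000), (101, 'Kochhar', 17000), (102, 'De Haan', 17000)]
```

The second run shows the incremental behaviour: employee 100 is updated in place through its key, and only employee 102 is inserted.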

Now let's create an interface. Under the project's folder, right-click Interfaces and select New Interface.

Provide a name for the interface and select the schema into which we need to load.

Click the Mapping tab in the interface. Drag the EMPLOYEES table from the HR model to the source area and the EMPLOYEES table from the DATA_TARGET model to the target area. Drag the fields from source to target to complete the mapping. Select EMPLOYEE_ID in the target mapping and verify that the Key check box is checked. If it is not, we cannot enable flow control in the IKM, so make sure it is checked.
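The Key check box matters because the target key is what identifies a row when data is matched and checked. The sketch below is a hypothetical representation of an interface mapping (target column to source expression, plus the key columns) with a validation that follows this tutorial's rule that flow control needs a key. The `InterfaceMapping` class and its messages are illustrative, not an ODI API.

```python
from dataclasses import dataclass, field


@dataclass
class InterfaceMapping:
    """Hypothetical model of an interface mapping: target column -> source expression."""
    target: str
    columns: dict
    key_columns: set = field(default_factory=set)

    def validate(self, flow_control: bool) -> None:
        # Every key column must be mapped, otherwise rows cannot be matched.
        unmapped = self.key_columns - set(self.columns)
        if unmapped:
            raise ValueError(f"{self.target}: key columns not mapped: {sorted(unmapped)}")
        # Follows the tutorial's note: no key, no flow control.
        if flow_control and not self.key_columns:
            raise ValueError(f"{self.target}: mark a key column before enabling FLOW_CONTROL")


mapping = InterfaceMapping(
    target="DATA_TARGET.EMPLOYEES",
    columns={
        "EMPLOYEE_ID": "EMPLOYEES.EMPLOYEE_ID",
        "LAST_NAME": "EMPLOYEES.LAST_NAME",
        "SALARY": "EMPLOYEES.SALARY",
    },
)
try:
    mapping.validate(flow_control=True)
except ValueError as err:
    print(err)  # DATA_TARGET.EMPLOYEES: mark a key column before enabling FLOW_CONTROL

mapping.key_columns.add("EMPLOYEE_ID")  # the Key check box on EMPLOYEE_ID
mapping.validate(flow_control=True)     # no error once the key is set
```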

Go to the Overview tab and select Staging Area Different From Target so that the staging tables are created in the ODI_STAGE schema.

Go to the Flow tab. Select the staging area in the diagram and select LKM SQL to SQL from the drop-down. This LKM has only one option, delete temporary objects. Set it to true so that the C$ temporary table is deleted once the target load is complete.

Select the target in the Flow tab and select the IKM from the drop-down. The IKM provides a few options, which we configure based on our needs.

FLOW_CONTROL - checks the constraints on the flow data before it is loaded into the target. Error records are placed in the E$ table.

TRUNCATE - truncates the target table before loading into it.

DELETE_ALL - deletes the rows instead of truncating the table.

STATIC_CONTROL - checks the constraints after loading into the target table, but the target table still keeps the error records.

RECYCLE_ERRORS - reads the rows from the E$ table along with the C$ data for integration, so that any records corrected there are recycled and loaded into the target.

COMMIT - we can set this to false if needed and do a separate commit when this interface is part of a package, so that we can maintain a single transaction across the objects used in the ODI package.

CREATE_TARG_TABLE - creates the target table at run time if it doesn't exist.

DELETE_TEMP_OBJECTS - deletes the temporary I$ tables after the target load.
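To make FLOW_CONTROL, RECYCLE_ERRORS and TRUNCATE concrete, here is a simplified simulation in Python with SQLite standing in for Oracle. Flow rows are written to an I$ table; rows that break a rule are copied to E$ with an error message and removed from I$ before the target is loaded; with recycling, E$ rows (for example, after being corrected) rejoin the flow on the next run. The `integrate` function, the E$ column layout and both rules are assumptions for illustration; the real steps are generated by the CKM and IKM from the constraints defined in the model.

```python
import sqlite3

# Hypothetical rules: error message -> SQL condition that identifies a bad row.
RULES = {
    "LAST_NAME is mandatory": "last_name IS NULL",
    "SALARY must be positive": "salary <= 0",
}


def integrate(conn, flow_rows, flow_control=True, recycle_errors=False, truncate=False):
    """Hypothetical sketch: load flow rows into EMPLOYEES through I$ and E$ tables."""
    conn.execute('CREATE TABLE IF NOT EXISTS "E$_EMPLOYEES" '
                 "(employee_id INTEGER, last_name TEXT, salary NUMERIC, err_mess TEXT)")
    conn.execute('DROP TABLE IF EXISTS "I$_EMPLOYEES"')
    conn.execute('CREATE TABLE "I$_EMPLOYEES" '
                 "(employee_id INTEGER, last_name TEXT, salary NUMERIC)")
    conn.executemany('INSERT INTO "I$_EMPLOYEES" VALUES (?, ?, ?)', flow_rows)
    if recycle_errors:
        # RECYCLE_ERRORS: rows rejected earlier (and possibly corrected) rejoin the flow.
        conn.execute('INSERT INTO "I$_EMPLOYEES" '
                     'SELECT employee_id, last_name, salary FROM "E$_EMPLOYEES"')
        conn.execute('DELETE FROM "E$_EMPLOYEES"')
    if flow_control:
        # FLOW_CONTROL: move invalid rows to E$ before anything reaches the target.
        for message, condition in RULES.items():
            conn.execute('INSERT INTO "E$_EMPLOYEES" SELECT employee_id, last_name, '
                         f'salary, ? FROM "I$_EMPLOYEES" WHERE {condition}', (message,))
            conn.execute(f'DELETE FROM "I$_EMPLOYEES" WHERE {condition}')
    if truncate:
        conn.execute("DELETE FROM employees")  # SQLite has no TRUNCATE statement
    # Merge by key; INSERT OR REPLACE stands in for the incremental update.
    conn.execute('INSERT OR REPLACE INTO employees '
                 'SELECT employee_id, last_name, salary FROM "I$_EMPLOYEES"')
    conn.commit()


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE employees (employee_id INTEGER PRIMARY KEY, "
             "last_name TEXT NOT NULL, salary NUMERIC)")
integrate(conn, [(100, "King", 24000), (101, None, 17000)])
print(conn.execute("SELECT employee_id FROM employees").fetchall())  # [(100,)]
print(conn.execute('SELECT employee_id, err_mess FROM "E$_EMPLOYEES"').fetchall())
# [(101, 'LAST_NAME is mandatory')]

# Correct the rejected row in E$, then recycle it on the next run.
conn.execute('UPDATE "E$_EMPLOYEES" SET last_name = ? WHERE employee_id = ?',
             ("Kochhar", 101))
integrate(conn, [], recycle_errors=True)
print(conn.execute("SELECT employee_id FROM employees ORDER BY 1").fetchall())
# [(100,), (101,)]
```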

Select the Controls tab, select the control knowledge module and select the constraints to be enabled.

There are a few options here that we can modify if needed; for example, we can ask ODI to drop the error table or the check table.

Save the interface.

With this, we have completed creating the interface. Let's run it now. Right-click the interface and click Execute. Select the Global context, No Agent and the required log level, then click OK. Click OK on the Session Started dialog.

Go to the Operator tab to verify the run. Expand All Executions and verify that our execution was successful. We can also expand the interface results and drill down into the steps executed at the LKM, IKM and CKM levels to see the generated SQL and the results.

Let's check the temporary tables now. Go to the ODI_STAGE schema and verify the E$ and check tables. If we set delete temporary objects to false in the LKM and IKM options, we will also see the C$ and I$ tables here.

Let's check the data in the target table.
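Beyond browsing the data, a quick reconciliation is to compare row counts and take a set difference between source and target: any row returned was not loaded as expected. The sketch below uses SQLite's `EXCEPT` (Oracle's traditional equivalent is `MINUS`) on two attached in-memory databases standing in for HR and DATA_TARGET; the `reconcile` helper and the trimmed three-column table are hypothetical.

```python
import sqlite3


def reconcile(conn, columns="employee_id, last_name, salary"):
    """Hypothetical check: (source count, target count, source rows missing from target)."""
    source_count = conn.execute("SELECT COUNT(*) FROM hr.employees").fetchone()[0]
    target_count = conn.execute("SELECT COUNT(*) FROM data_target.employees").fetchone()[0]
    # Rows present in the source but absent (or different) in the target.
    missing = conn.execute(
        f"SELECT {columns} FROM hr.employees "
        f"EXCEPT SELECT {columns} FROM data_target.employees"
    ).fetchall()
    return source_count, target_count, missing


conn = sqlite3.connect(":memory:")
conn.execute("ATTACH DATABASE ':memory:' AS hr")           # plays HR
conn.execute("ATTACH DATABASE ':memory:' AS data_target")  # plays DATA_TARGET
for schema in ("hr", "data_target"):
    conn.execute(f"CREATE TABLE {schema}.employees "
                 "(employee_id INTEGER PRIMARY KEY, last_name TEXT, salary NUMERIC)")
conn.executemany("INSERT INTO hr.employees VALUES (?, ?, ?)",
                 [(100, "King", 24000), (101, "Kochhar", 17000)])
conn.execute("INSERT INTO data_target.employees VALUES (100, 'King', 24000)")
print(reconcile(conn))  # (2, 1, [(101, 'Kochhar', 17000)])
```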

With this, we have completed table-to-table loading using an ODI interface. Please let me know if you have any questions.

Hi,

This is very nice information with screenshots, but if the screenshots in between were enabled, that would really be of great help.

My source schema is HR, from an Oracle Apps R12 database server.

And the final target schema is DEV_BIPLATFORM, which gets created while installing the OBIEE 11g product.

I am stuck with the blank table created in the target schema, which I need to view in ODI. When reverse engineering is done, it is not showing as in the screenshot above. Any help will be much appreciated.

Can the information with all the screenshots be mailed to my mail ID, please: rohan.soman@ktcss.com
