Running Scenarios Asynchronously (in Parallel) in ODI

In this post, I will demonstrate how to run interfaces, procedures or packages asynchronously inside an ODI package.

By default, ODI doesn't provide an option to run interfaces, procedures or packages asynchronously (that is, in parallel with the session that starts them). To run them asynchronously, we need to convert the interface, procedure or package into a scenario; scenarios can be run asynchronously inside a package or through a command.
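To make the difference concrete, here is a minimal Python sketch, not ODI code. It assumes each scenario can be represented by a hypothetical `run_scenario` function that just sleeps, and it uses a thread pool in place of the ODI agent starting sessions. Run synchronously, the total time is roughly the sum of the individual runs; run asynchronously, it is roughly the longest single run.

```python
import time
from concurrent.futures import ThreadPoolExecutor

# Hypothetical scenario names; in ODI these would be the generated scenarios.
SCENARIOS = ["REGIONS", "COUNTRIES", "LOCATIONS", "DEPARTMENTS", "EMPLOYEES"]


def run_scenario(name: str, seconds: float = 0.2) -> str:
    """Stand-in for a scenario run: sleeps to simulate the load work."""
    time.sleep(seconds)
    return name


def run_synchronously(names):
    """Each run blocks until it finishes before the next one starts."""
    start = time.perf_counter()
    results = [run_scenario(n) for n in names]
    return results, time.perf_counter() - start


def run_asynchronously(names):
    """All runs start at once; the caller waits only when collecting results."""
    start = time.perf_counter()
    with ThreadPoolExecutor(max_workers=len(names)) as pool:
        futures = [pool.submit(run_scenario, n) for n in names]
        results = [f.result() for f in futures]
    return results, time.perf_counter() - start


if __name__ == "__main__":
    _, sync_secs = run_synchronously(SCENARIOS)
    _, async_secs = run_asynchronously(SCENARIOS)
    print(f"synchronous:  {sync_secs:.2f}s")   # about 5 x 0.2s
    print(f"asynchronous: {async_secs:.2f}s")  # about 0.2s
```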

In this post, I will demonstrate how to run the scenarios asynchronously through a package as well as through a command.

1. In my tutorial, I am going to use five interfaces that perform table-to-table loads for regions, countries, locations, departments and employees from the hr schema to the data_target schema.

2. I will convert these five interfaces to scenarios and run them asynchronously inside a package and through a command in a procedure.

The following are the five interfaces I will use in this tutorial. I will also use the Jython execution report procedure (created in my previous post, "Jython execution report") to write the execution report to a file after the parallel run.

Let's convert all the interfaces to scenarios so that we can run them asynchronously. Right-click each interface and select Generate Scenario.

1. Running the scenarios asynchronously inside a package

Let's create a package, drag and drop the generated scenarios into it, and connect them in sequence with OK links in the order regions, countries, locations, departments, employees. After employees, connect the flow to a wait-for-child-sessions step (the OdiWaitForChildSession tool) and then to the execution report procedure.

The wait-for-child-sessions event detection step waits for all child sessions (the asynchronous sessions) to finish before passing control to the next step, which here is the execution report procedure.
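The package flow described so far (asynchronous steps, then a wait, then the report) can be sketched in Python as below. This is only an analogy under stated assumptions: child sessions are simulated with threads, the session numbers following 29001 are hypothetical (ODI assigns real numbers itself), and the function names are illustrative, not ODI APIs.

```python
import itertools
import threading
import time
from concurrent.futures import ThreadPoolExecutor, wait

SCENARIOS = ["REGIONS", "COUNTRIES", "LOCATIONS", "DEPARTMENTS", "EMPLOYEES"]


def run_package(parent_sess_no, scenarios, child_seconds=0.1):
    """Simulate: async scenario steps -> wait for child sessions -> report step."""
    # Hypothetical numbering; real ODI session numbers come from the repository.
    next_sess_no = itertools.count(parent_sess_no + 1)
    events = []
    lock = threading.Lock()

    def child_session(sess_no, name):
        time.sleep(child_seconds)  # stand-in for the interface's load
        with lock:
            events.append(("child_done", sess_no, parent_sess_no, name))

    with ThreadPoolExecutor(max_workers=len(scenarios)) as pool:
        # Asynchronous steps: each one starts a child session and the package
        # immediately follows the OK link to the next step.
        futures = [pool.submit(child_session, next(next_sess_no), n) for n in scenarios]
        # Wait-for-child-sessions step: block until every child session has ended.
        wait(futures)
        for f in futures:
            f.result()  # surface any child failure
    # Next step: the report procedure runs inside the parent session itself.
    events.append(("report", parent_sess_no, None, "EXECUTION_REPORT"))
    return events


if __name__ == "__main__":
    for event in run_package(29001, SCENARIOS):
        print(event)
```

The report event is always last, because the wait only returns once every child has appended its own event.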

I have arranged the scenarios vertically in the diagram because they will run in parallel, but they are still connected to each other sequentially with OK links.

Let's configure each scenario step in the package diagram to run asynchronously: select each scenario step and set its Synchronous/Asynchronous option to Asynchronous. By default, this mode is set to Synchronous.

We can also set options for the wait-for-child-sessions event detection step. If we don't specify a parent session ID, it uses the current session ID (that is, the session of the package in which it is running).

The Keywords property restricts the wait to child sessions that match the selected keywords. Keywords can be set in the properties of each scenario step, so we can group scenarios by keyword and have the wait step wait only for the child sessions that match.
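The two options above (default parent session, keyword filter) can be modelled with a small Python sketch. Everything here is hypothetical: `ChildSession`, `wait_for_child_sessions` and the session numbers are illustrative names, and the sketch assumes a simple match-any keyword rule; the actual matching behaviour is whatever the ODI tool's keyword options specify.

```python
import threading
from dataclasses import dataclass, field
from typing import List, Optional, Set

CURRENT_SESS_NO = 29001  # hypothetical: the session of the running package


@dataclass
class ChildSession:
    sess_no: int
    parent_sess_no: int
    keywords: Set[str]
    done: threading.Event = field(default_factory=threading.Event)


def wait_for_child_sessions(children: List[ChildSession],
                            parent_sess_no: Optional[int] = None,
                            keywords: Optional[Set[str]] = None,
                            timeout: float = 10.0) -> List[int]:
    """Block until the matching child sessions finish; return their numbers.

    parent_sess_no defaults to the current session. If keywords are given,
    only children sharing at least one keyword are waited for (assumed rule).
    """
    parent = CURRENT_SESS_NO if parent_sess_no is None else parent_sess_no
    targets = [c for c in children
               if c.parent_sess_no == parent
               and (not keywords or keywords & c.keywords)]
    for c in targets:
        if not c.done.wait(timeout):
            raise TimeoutError(f"child session {c.sess_no} still running")
    return [c.sess_no for c in targets]


def start_fake_child(child: ChildSession, seconds: float) -> None:
    """Mark the child as finished after `seconds`, simulating its run."""
    timer = threading.Timer(seconds, child.done.set)
    timer.daemon = True
    timer.start()
```

For example, with two children tagged `DIM` and one tagged `FACT`, `wait_for_child_sessions(children, keywords={"DIM"})` returns as soon as the two `DIM` children finish, even if the `FACT` child is still running.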

Let's run the package and check the results. The run is successful.

A child session is created for each asynchronous scenario run, but no child session is created for the procedure; it runs as part of the parent session. Each parallel run is assigned a new session ID, which is a child of the parent session 29001.

Let's check the execution report to see when each interface was triggered. All the scenarios started at the same time, and the wait-for-child-sessions step waited until the last parallel scenario run had completed.
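If the report's start and end times are available as data, the two observations above can be checked mechanically. The following is a minimal Python sketch under assumptions: the `(scenario, start, end)` row shape, the two-second tolerance and the function name are all hypothetical, and the timestamps in the usage example are made up for illustration, not taken from the run above.

```python
from datetime import datetime, timedelta
from typing import List, Tuple

Run = Tuple[str, datetime, datetime]  # (scenario, start, end)


def check_parallel_run(children: List[Run], wait_end: datetime,
                       tolerance: timedelta = timedelta(seconds=2)):
    """Return (started_together, wait_outlasted_children)."""
    starts = [start for _, start, _ in children]
    ends = [end for _, _, end in children]
    # Parallel start: first and last start are within the tolerance.
    started_together = max(starts) - min(starts) <= tolerance
    # The wait step must not finish before the slowest child session.
    wait_outlasted_children = wait_end >= max(ends)
    return started_together, wait_outlasted_children


if __name__ == "__main__":
    t0 = datetime(2024, 1, 1, 10, 0, 0)  # made-up timestamps
    runs = [("REGIONS", t0, t0 + timedelta(seconds=4)),
            ("EMPLOYEES", t0 + timedelta(seconds=1), t0 + timedelta(seconds=9))]
    print(check_parallel_run(runs, wait_end=t0 + timedelta(seconds=10)))
```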

2. Running the scenarios asynchronously using a command in a procedure

Here I want to solve a use case where any number of scenarios must be run in parallel, but which scenarios to run is known only at run time.

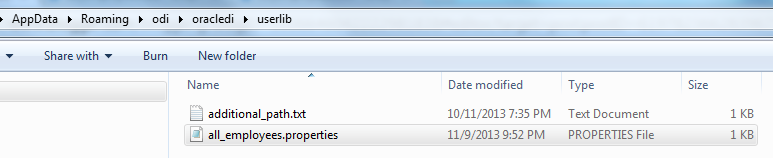

In this use case, a table is created at run time containing the names and versions of all the scenarios that need to run in parallel. We can read this table in the procedure's source section and start each scenario asynchronously in the target section through procedure binding (the target command is executed once for each row returned by the source query).
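The source/target binding pattern described above can be sketched in Python, with SQLite standing in for the run-time table. The table name `SCEN_RUN_LIST`, its columns and the sample rows are hypothetical, and the `target` callable stands in for whatever the procedure's target section executes; the point is only the shape: the source query runs once, and the target runs once per returned row.

```python
import sqlite3
from typing import Callable, List, Tuple

# Hypothetical table and column names; the real table is built at run time.
SOURCE_QUERY = "SELECT SCEN_NAME, SCEN_VERSION FROM SCEN_RUN_LIST ORDER BY SCEN_NAME"


def make_demo_table() -> sqlite3.Connection:
    """In-memory stand-in for the run-time table of scenarios to launch."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE SCEN_RUN_LIST (SCEN_NAME TEXT, SCEN_VERSION TEXT)")
    conn.executemany("INSERT INTO SCEN_RUN_LIST VALUES (?, ?)",
                     [("LOAD_REGIONS", "001"), ("LOAD_COUNTRIES", "001")])
    return conn


def run_procedure(conn: sqlite3.Connection,
                  target: Callable[[str, str], str]) -> List[str]:
    """Source command runs once; the target command runs once per returned row."""
    rows: List[Tuple[str, str]] = conn.execute(SOURCE_QUERY).fetchall()
    return [target(name, version) for name, version in rows]


if __name__ == "__main__":
    started = run_procedure(make_demo_table(),
                            lambda name, version: f"start {name} v{version} asynchronously")
    print(started)
```

Because the list of scenarios lives in data rather than in the procedure, adding a scenario to the parallel run only means inserting another row.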

This is what the dynamic table that stores the scenario names and versions looks like.

Let's create the procedure with a source section and a target section.

The source section runs a query on the dynamic table to fetch the scenario names and versions.

For each row returned by the source query, the target section executes a start scenario (OdiStartScen) command for that scenario in asynchronous mode.
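To show what the agent effectively does with each row, here is a Python sketch of the substitution step. It is an illustration, not ODI code, and it rests on assumptions worth checking against the ODI Tools reference: the target section uses the ODI Tools technology, source column aliases are referenced as `#ALIAS`, and `-SYNC_MODE=2` requests asynchronous execution (1 being synchronous). The column aliases and sample rows are hypothetical.

```python
import re
from typing import Dict

# Target command as it might be written with the ODI Tools technology.
# Assumptions: #SCEN_NAME / #SCEN_VERSION match the source query's column
# aliases, and -SYNC_MODE=2 requests asynchronous execution.
TARGET_COMMAND = ('OdiStartScen "-SCEN_NAME=#SCEN_NAME" '
                  '"-SCEN_VERSION=#SCEN_VERSION" "-SYNC_MODE=2"')

_ALIAS = re.compile(r"#([A-Z_][A-Z0-9_]*)")


def bind(command: str, row: Dict[str, str]) -> str:
    """Replace every #ALIAS with that column's value from the current source row."""
    return _ALIAS.sub(lambda m: row[m.group(1)], command)


if __name__ == "__main__":
    rows = [{"SCEN_NAME": "LOAD_REGIONS", "SCEN_VERSION": "001"},
            {"SCEN_NAME": "LOAD_COUNTRIES", "SCEN_VERSION": "001"}]
    for row in rows:
        print(bind(TARGET_COMMAND, row))
```

Each printed line is one independent start command; since none of them waits for its scenario to finish, the scenarios run side by side as child sessions.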

Let's run the procedure and verify the results: a child session is created for each scenario, and the scenarios ran in parallel.